The fluid displacement in the cochlea causes traveling waves on the basilar membrane and shears the hair cells of the organ of Corti at the point of maximum amplitude, which then send out nerve impulses. These reach the brain stem via the auditory nerve. Only there do the signals from both ears converge, before they are processed in the cerebral cortex.

The Keyboard of Hearing

- the quiet radio sound that I almost always enjoy

- the passing cars in front of our house

- various other noises such as dogs barking, birds twittering and machine noise

So far, the following instruments used by the brain have been identified:

The level difference:

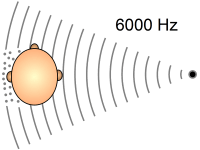

refers to the lower level and altered frequency response at the ear facing away from the sound source.

The relationship between direct and diffuse sound:

If the sound source is very close, we have a high proportion of direct sound.

Typical patterns of early reflections in a room:

The arrival time and angle of the reflections vary depending on the distance to the sound source, and the reflections are also used to gauge the surrounding space (bathroom, church, etc.).

Minimal head movements:

Slight, unconscious direction-finding movements of the head, which are particularly helpful for front/back localization.

As this list readily shows, room reflections are essential for localization and spatial hearing. The “law of the first wave front” (Blauert) is helpful in this context: it states that a sound source is always localized in the direction from which the first sound waves reach the head. This means that the ear can locate a sound source from the delay time differences even in rooms with many reflections, where the level of the reflections exceeds that of the original sound. For small delay times below 1 ms, this law is supplemented by summing localization, i.e. the delayed sound is added to the original sound and both together determine the perceived direction. With increasing delay times, localization follows the law of the first wave front until echo perception sets in at delays above approx. 40 ms (depending on frequency and level).
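The delay ranges above can be turned into a small calculation. The following is a minimal sketch, not a psychoacoustic model: it assumes a speed of sound of 343 m/s (air at roughly 20 °C), and the function names `reflection_delay_ms` and `perceptual_regime` are made up for illustration. The 1 ms and 40 ms limits are the approximate values from the text and in reality shift with frequency and level.

```python
# Assumption: speed of sound in dry air at about 20 degrees Celsius.
SPEED_OF_SOUND_M_S = 343.0

# Approximate limits taken from the text; they depend on frequency and level.
SUMMING_LIMIT_MS = 1.0
ECHO_LIMIT_MS = 40.0


def reflection_delay_ms(direct_path_m: float, reflected_path_m: float) -> float:
    """Delay of a reflection relative to the direct sound, in milliseconds."""
    if reflected_path_m < direct_path_m:
        raise ValueError("a reflected path cannot be shorter than the direct path")
    extra_path_m = reflected_path_m - direct_path_m
    return extra_path_m / SPEED_OF_SOUND_M_S * 1000.0


def perceptual_regime(delay_ms: float) -> str:
    """Rough classification of a delayed sound by its delay alone."""
    if delay_ms < 0:
        raise ValueError("delay must not be negative")
    if delay_ms < SUMMING_LIMIT_MS:
        return "summing localization"
    if delay_ms <= ECHO_LIMIT_MS:
        return "first wave front"
    return "echo"


if __name__ == "__main__":
    # Hypothetical example: listener 3 m from the source, wall reflection travels 5 m.
    delay = reflection_delay_ms(3.0, 5.0)
    print(f"{delay:.1f} ms -> {perceptual_regime(delay)}")
```

In a typical living room the detour of a floor, wall or ceiling reflection is only a few meters, so its delay falls into the middle range, where the reflection is attributed to the original sound rather than heard as a separate event.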

This graphic illustrates the performance of our hearing: the early reflections arriving within 30 ms, i.e. floor, wall and ceiling reflections, are not interpreted as separate sound events but are assigned to the original sound. In addition, the sound is subjectively experienced as fuller and more spatial than the original sound alone.

Ideally, the reflections of the original sound should leave a “similar”, i.e. practically the same, auditory impression as the original sound itself. This can be achieved with wide-radiating systems, preferably omnidirectional sound sources. Direct-radiating loudspeakers, by contrast, are omnidirectional in the bass range but radiate the higher frequencies only in a forward direction. This means that the first wave front reaches the listener across the entire frequency range, while the overtones that are so important for the spatial auditory impression and timbre are reflected only incompletely, even if you are sitting in the ideal listening triangle of the direct-radiating loudspeakers. This is unfortunate, because humans have three times more nerve cells for the higher frequencies than for the lower ones, which shows the importance of these higher-frequency reflections. How does the human organism actually react to this unnatural sound pressure, which is strongly focused in the high frequencies? With stress or relaxation?

But why are we concerned with room reflections at all, when in the “ideal case” the sound recording should not be influenced by indirect sound (generated additionally by the listening room), which could distort the auditory impression? This requirement is only fulfilled in an anechoic chamber. Otherwise the following applies: everything we hear is a product of the original sound source and the surrounding room.

We are therefore right to focus our attention on the listening room, as it is the final audio component and functions as a playback element for the spatially displayed music, similar to the projection surface of a slide projector. A mirrored screen corresponds to a reverberant room (bathroom) and a black screen to an overdamped, anechoic room.

Each of us has precise sound associations in our heads when we hear the keywords bathroom or extremely muffled room, because the ability to hear spatially is learned just like walking or speaking, which brings us to the real source of fascination: our brain.

The enormous amount of data from the cochlea is temporarily stored and pre-sorted in a kind of neuronal network so that it can then be reduced to the essentials in working memory (short-term memory) and processed together with long-term memory. Our brain interprets all signals coming from the ear, which is why we are able to recognize different musical instruments such as double bass or violin from the high-pitched tones of a cheap transistor radio alone, or to understand what we hear over the data-reduced telephone. The left hemisphere of the brain is said to be responsible for the perception of volume, rhythm and sound duration, i.e. analytical perception, while the right hemisphere is said to be responsible for tempo changes and the expression of pitches, i.e. the holistic perception of musical events. When listening to music, we access both hemispheres of the brain, which are shaped by musical experience (e.g. musicians with absolute pitch) and the current state of mind.

But what is actually going on in the background beyond this clear anatomical pathway, causing goose bumps and an increased heart and breathing rate with some pieces of music? The limbic system is the prime suspect. It is located centrally in the head, below the cerebrum and above the brain stem. This nerve center regulates vegetative processes such as breathing, pulse, and hormone and neurotransmitter release. The limbic system is a network of functional units that communicate with each other. Its heart, the hippocampus, is the central coordination point for memory functions, perception and emotional processes, which are closely interwoven.

So when sounds and music hit our ears, bones and skin, they are converted into electrical signals and sent to the limbic system before we become conscious of them. This makes sense, because hearing is a distance sense, and if danger is “reported”, it is important that the body is already prepared for it through the rapid, unconscious release of hormones (hissing poisonous snake: immediate recoil). The early warning system of hearing therefore also appears to be directly connected to long-term memory, so that it can react extremely quickly to sounds without the time-consuming conscious process (similar to a reflex). Cognitive hearing is only possible through a simultaneous connection to long-term memory, where information about the sound of individual noises, voices, musical instruments, and so on is stored. A strongly positive or negative emotional association by the limbic system is important for rapid memory retention, as demonstrated by the infamous earworms that stick with you for days.

"Listening to music" always evokes feelings and memories; even music playing in the background that isn't consciously perceived triggers physical reactions. Humans have the ability to recall sensory perceptions from long-term memory and compare them with reality; sensory impressions that are always the same leave strong memories and associations. Listening to music is a constant learning process for our brain, with constantly repeated impressions being incorporated into long-term memory and, if positively associated, rewarded (dopamine, endorphins). Humans are known to be creatures of habit!

If my children grow up exclusively listening to highly compressed music via earbuds, does that mean they'll only learn to recognize this type of music as music? The converse would be that they'd no longer be able to recognize and enjoy live music and concerts as music, but would perceive them as something foreign and new.

With this insight, I can interpret the statement of an acquaintance after a wonderful concert evening: "The orchestra was really good, but who actually turned the treble up so far?" Apparently, he had conditioned his hearing with direct-radiating, focused speakers to the point that he could only accept this exaggerated auditory impression as music. It's incomprehensible to me how one can hear a spatial or stage sound with highly focused speakers. I always hear two sound sources! Buzzwords like loudness war, digitally remastered, MP3, etc., as well as the fact that I am virtually at the mercy of sound engineers and ideologies of playback technology, and thus of the conditioning and training of my hearing, inspire me to act and to invest meticulous work in my music system and listening room.

The art of music reproduction in one's own home consists in creating an authentic illusion of reality in order to enjoy the experience of music in a completely individual way. This allows me to explore the full range of listening possibilities.

Anette Duevel